AI, Cloud Computing, Big Data & IoT - Key to a Smarter Future


Introduction

Artificial Intelligence (AI)

Different Types of AI

General AI

Super AI

The concept of artificial super intelligence (ASI) carries immense implications for humanity, up to and including the possibility of human extinction. Although the idea may sound like something out of a science fiction novel, it has some basis: ASI refers to a hypothetical system whose intelligence surpasses human intellect in every respect, outperforming humans at every task.

Major Branches of AI

History of Artificial Intelligence

11 Advantages of Using Artificial Intelligence

- Display cognitive abilities

- Absorb lessons from past encounters

- Utilize information gained through experience

- Tackle intricate scenarios

- Find solutions in the absence of key data

- Identify crucial elements

- Respond promptly and accurately to novel circumstances

- Interpret visual representations

- Manage and interpret symbols

- Demonstrate creativity and innovation

- Employ problem-solving strategies

7 Disadvantages of Artificial Intelligence

- High costs

- Lack of innovation

- Unemployment

- Encourages human idleness

- Lack of moral principles

- Lack of emotion

- Stagnation

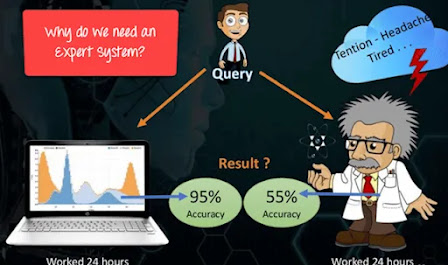

Expert Systems

Expert System Structure & Components

3 Components of an Expert System:
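Expert systems are commonly described as having three parts: a knowledge base of facts and if-then rules, an inference engine that applies those rules, and a user interface. As a minimal sketch, assuming simple forward chaining (one of several possible inference strategies), the first two parts can be modeled in a few lines. The `Rule` class, the `forward_chain` function, and the car-diagnosis rules are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An if-then rule: when every condition is a known fact, add the conclusion."""
    conditions: frozenset[str]
    conclusion: str


# Knowledge base: hypothetical car-diagnosis rules, for illustration only.
KNOWLEDGE_BASE = [
    Rule(frozenset({"engine_wont_start", "lights_dim"}), "battery_weak"),
    Rule(frozenset({"battery_weak"}), "recommend_battery_charge"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Inference engine: keep firing rules until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires when all its conditions are already known facts.
            if rule.conditions <= derived and rule.conclusion not in derived:
                derived.add(rule.conclusion)
                changed = True
    return derived


if __name__ == "__main__":
    # Stand-in for the user interface: the user reports observed symptoms.
    observed = {"engine_wont_start", "lights_dim"}
    print(sorted(forward_chain(observed, KNOWLEDGE_BASE)))
```

Because the inference engine can only apply what the knowledge base contains, a single wrong rule propagates into wrong conclusions, which is the GIGO limitation listed among the disadvantages of expert systems.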

5 Applications of Expert Systems:

10 Advantages of Using an Expert System:

- Enhances the quality of decision-making.

- Reduces costs by lessening the need to consult human experts for problem resolution.

- Delivers quick and reliable solutions to intricate problems within a particular field.

- Acquires and utilizes scarce knowledge effectively.

- Ensures uniformity in addressing recurring problems.

- Retains a substantial amount of information.

- Furnishes prompt and precise responses.

- Offers thorough explanations for decision-making.

- Resolves complex and demanding issues.

- Operates consistently without experiencing fatigue.

4 Disadvantages of Expert Systems

- Incapable of making decisions in exceptional circumstances.

- The Garbage-In, Garbage-Out (GIGO) principle applies: if the knowledge base contains an error, the system will inevitably produce incorrect decisions.

- Maintenance costs are high.

- Expert systems struggle with problems that differ from those their knowledge base was built for; in such cases, a human expert shows greater creativity.

CLOUD COMPUTING

What is Cloud?

What is Cloud Computing?


Cloud Computing Architecture


10 ELEMENTS OF CLOUD COMPUTING ARCHITECTURE

Infrastructure Layer:

Platform Layer:

Application Layer:

Deployment Models:

Service Models:

Management Layer:

Security and Compliance:

Networking:

Scalability and Elasticity:

Fault Tolerance and High Availability:
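Scalability and elasticity mean that capacity grows and shrinks with demand. A minimal sketch of a threshold-style scaling decision is shown below, assuming each instance can handle a fixed amount of load. The function name `desired_instance_count`, the per-instance capacity, and the minimum and maximum limits are hypothetical parameters, not any cloud provider's API.

```python
import math


def desired_instance_count(current_load: float,
                           capacity_per_instance: float,
                           min_instances: int = 1,
                           max_instances: int = 10) -> int:
    """Return how many instances are needed to serve current_load,
    clamped to the range [min_instances, max_instances]."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so that partial demand still gets a full instance.
    needed = math.ceil(current_load / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    # Hypothetical figures: each instance handles 100 requests per second.
    for load in (0, 250, 1800):
        print(load, "req/s ->", desired_instance_count(load, 100), "instance(s)")
```

The minimum keeps the service available when load drops to zero, and the maximum caps cost. Production autoscalers usually also add a cooldown period so capacity does not flip back and forth on short spikes; that is omitted here.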

COMPONENTS OF CLOUD COMPUTING ARCHITECTURE

Working Models of Cloud Computing

Deployment Models


PUBLIC CLOUD


PRIVATE CLOUD


HYBRID CLOUD

Service Models

Infrastructure as a Service (IaaS)

Platform as a Service (PaaS)


Software as a Service (SaaS)
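A common way to tell the three service models apart is to ask which layers of the stack the provider manages and which the customer manages. The sketch below encodes the usual textbook split as a lookup table; the layer names and the exact boundaries are simplifying assumptions, and real offerings vary by provider.

```python
# Layers of a typical application stack, from bottom to top (simplified).
STACK = ["networking", "storage", "servers", "virtualization",
         "operating_system", "middleware", "runtime", "data", "applications"]

# Index of the first layer the customer manages under each model;
# every layer below that index is managed by the provider.
CUSTOMER_STARTS_AT = {
    "IaaS": STACK.index("operating_system"),
    "PaaS": STACK.index("data"),
    "SaaS": len(STACK),  # the provider manages the whole stack
}


def responsibilities(model: str) -> dict[str, list[str]]:
    """Split the stack into provider-managed and customer-managed layers."""
    cut = CUSTOMER_STARTS_AT[model]
    return {"provider": STACK[:cut], "customer": STACK[cut:]}


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, "-> customer manages:", responsibilities(model)["customer"])
```

Moving from IaaS to PaaS to SaaS shifts more of the stack to the provider, trading control for convenience.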

10 ADVANTAGES OF CLOUD COMPUTING

5 DISADVANTAGES OF CLOUD COMPUTING

Internet of Things (IoT)

Introduction

How Does IoT Work?


Life Cycle of IoT


Compilation

Transmission

Interpretation

Execution

Actions are taken based on the interpreted data, such as sending notifications, communicating with other computers, and sending emails.
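The four stages of the IoT life cycle (compilation, transmission, interpretation, execution) can be sketched as a small pipeline. This is a minimal illustration under stated assumptions: the sensor readings, the 30 °C threshold, and all function names are hypothetical, and transmission is simulated with JSON serialization rather than a real network protocol.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Reading:
    device_id: str
    temperature_c: float


def compile_readings() -> list[Reading]:
    """Compilation: collect data from sensors (simulated with fixed, hypothetical values)."""
    return [Reading("sensor-1", 21.5), Reading("sensor-2", 38.2)]


def transmit(readings: list[Reading]) -> str:
    """Transmission: serialize readings to JSON, as a device might before sending them."""
    return json.dumps([asdict(r) for r in readings])


def interpret(payload: str, threshold_c: float = 30.0) -> list[str]:
    """Interpretation: decode the payload and flag devices above the threshold."""
    return [rec["device_id"] for rec in json.loads(payload)
            if rec["temperature_c"] > threshold_c]


def execute(flagged: list[str]) -> list[str]:
    """Execution: act on the result; here we only build notification messages."""
    return [f"ALERT: {device} is above the temperature threshold" for device in flagged]


if __name__ == "__main__":
    for message in execute(interpret(transmit(compile_readings()))):
        print(message)
```

In a real deployment, each stage would typically run in a different place (on the device, over the network, in a cloud service), which is why the stages are kept as separate functions here.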

Components of IoT


10 Applications of IoT


What is Big Data?

Introduction

Three Characteristics of Big Data


Volume (Data Quantity)

Velocity (Data Speed)

Variety (Data Types)
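Velocity means data keeps arriving and often has to be processed as it flows rather than stored first. One common technique is a sliding window over the stream; the sketch below keeps a moving average of the most recent values using a bounded `deque`. The function name, window size, and sample readings are hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator


def moving_average(stream: Iterable[float], window: int = 3) -> Iterator[float]:
    """Yield the mean of the most recent `window` values each time a value arrives."""
    if window <= 0:
        raise ValueError("window must be positive")
    # With maxlen set, the oldest value is dropped automatically once the deque is full.
    recent = deque(maxlen=window)
    for value in stream:
        recent.append(value)
        yield sum(recent) / len(recent)


if __name__ == "__main__":
    readings = [10, 20, 30, 40, 50]  # stand-in for an incoming data stream
    print(list(moving_average(readings, window=3)))
```

Because the function is a generator, it works the same whether `stream` is a short list or an unbounded feed, and memory use stays bounded by the window size.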

The Structure of Big Data

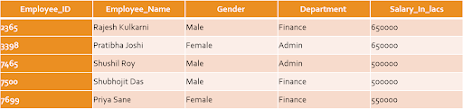

Structured Data:

Structured data is any data that can be stored, accessed, and processed in a fixed, predefined format. A typical example is an 'Employee' table in a database.
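The 'Employee' table example can be made concrete with Python's built-in `sqlite3` module: because the schema is fixed in advance, the data can be stored and queried with SQL. This is a minimal sketch; the column names, the `build_employee_db` helper, and the rows are hypothetical.

```python
import sqlite3


def build_employee_db() -> sqlite3.Connection:
    """Create an in-memory database with a fixed-schema Employee table (hypothetical data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE Employee ("
        " id INTEGER PRIMARY KEY,"
        " name TEXT NOT NULL,"
        " department TEXT NOT NULL,"
        " salary REAL NOT NULL)"
    )
    rows = [
        (1, "Asha", "Finance", 52000.0),
        (2, "Ben", "IT", 61000.0),
        (3, "Chen", "IT", 58000.0),
    ]
    conn.executemany("INSERT INTO Employee VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = build_employee_db()
    # Every row has the same columns, so SQL can filter and sort directly.
    query = "SELECT name, salary FROM Employee WHERE department = ? ORDER BY salary DESC"
    for name, salary in conn.execute(query, ("IT",)):
        print(name, salary)
    conn.close()
```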


Unstructured Data
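Unstructured data, such as free-form text, has no predefined schema, so any structure has to be derived during analysis. As a minimal illustration using only the standard library, the sketch below turns a hypothetical product review into word counts; the `word_frequencies` helper is an assumption, and real text pipelines normally use dedicated language-processing tools.

```python
import re
from collections import Counter


def word_frequencies(text: str, top_n: int = 3) -> list[tuple[str, int]]:
    """Lower-case the text, extract words, and return the most common ones."""
    words = re.findall(r"[a-z']+", text.lower())
    # Counter.most_common breaks ties by first-seen order.
    return Counter(words).most_common(top_n)


if __name__ == "__main__":
    review = "Great phone. Battery life is great, camera is great, price is fair."
    print(word_frequencies(review))
```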


Semi-structured Data
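Semi-structured data, such as JSON or XML, carries its own keys or tags but does not enforce a fixed schema, so individual records can differ in shape. The sketch below parses hypothetical JSON records whose fields vary and reads the optional keys defensively; the `RAW` sample and the `summarize` helper are assumptions for illustration.

```python
import json

# Hypothetical records: each has an id and name, but other fields vary.
RAW = """
[
  {"id": 1, "name": "Asha", "email": "asha@example.com"},
  {"id": 2, "name": "Ben", "phones": ["555-0100", "555-0101"]},
  {"id": 3, "name": "Chen", "email": "chen@example.com", "tags": ["vip"]}
]
"""


def summarize(raw: str) -> list[dict]:
    """Parse JSON records and normalize optional fields that may be missing."""
    summary = []
    for record in json.loads(raw):
        summary.append({
            "id": record["id"],
            "name": record["name"],
            # Keys are self-describing but optional, so use .get() with defaults.
            "email": record.get("email", "unknown"),
            "phone_count": len(record.get("phones", [])),
        })
    return summary


if __name__ == "__main__":
    for row in summarize(RAW):
        print(row)
```

The result is a uniform, table-like shape, which is often the first step before loading semi-structured data into a structured store.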


Big Data Sources


- Social media platforms.

- Internet of Things (IoT) devices.

- E-commerce transactions.

- Healthcare records.

- Financial transactions.

- Sensor data.

- Web traffic.

- Government databases.

- Mobile applications.

- Industrial machinery.

10 Applications of Big Data


Disadvantages of Big Data

- Privacy concerns.

- Security risks.

- Data quality issues.

- Costs and complexity.

- Overreliance on data.

- Legal and regulatory compliance.

- Data breach vulnerability.

- Bias and discrimination.

- Data ownership and control.

- Infrastructure limitations.

- Organizations can be overwhelmed by the data: they need the right people solving the right problems.

- Costs can escalate too fast: it isn't necessary to capture 100% of the data.

- Many sources of big data raise privacy issues, which call for self-regulation and legal regulation.

10 Advantages of Big Data

- Informed decision-making.

- Improved efficiency.

- Enhanced customer experience.

- Competitive advantage.

- Innovation and product development.

- Risk management.

- Real-time insights.

- Personalization and targeting.

- Improved healthcare outcomes.

- Scientific advancements.

Conclusion

Frequently Asked Questions (FAQ)

What is AI?

AI, or Artificial Intelligence, is a branch of computer science dedicated to creating systems capable of performing tasks that typically require human intelligence.

What is an Expert System?

An expert system is a computer program designed to solve complex problems and provide decision-making capabilities comparable to those of a human expert.

What is Cloud Computing?

Cloud computing is the delivery of computing services, such as storage, processing power, and software, over the internet. These services are available on demand, and users do not have to manage the underlying infrastructure directly.

What is IoT?

The Internet of Things (IoT) is an ecosystem of interconnected physical objects and electronic devices. These objects are embedded with sensors and can collect and exchange data over the internet.

What is Big Data?

Big data refers to large volumes of structured, semi-structured, and unstructured data generated from various sources such as social media, sensors, mobile devices, and enterprise systems.
